GBIF Soundscape

Introduction

Unstructured data such as free text, images, sounds, and other media are used less commonly in biodiversity studies than structured information such as species occurrence records. Nevertheless, these sources represent a rich stream of information, some of which is not generally available through more conventional sources. This information is of value for scientific research and conservation management, as well as for communication and outreach to the public and other audiences.

A relatively recent development in the GBIF portal and API is the registration of media (sound, images, or video) in occurrence records. Here, we use this facility to reconstruct the "soundscapes" of particular regions by compiling the bird and frog sounds from those regions.

Source code

We do the data manipulation in R, using the rgbif package to interact with GBIF. The source code is available here. Note for users not familiar with R: if you get errors saying "there is no package called blah", you just need to install the package with install.packages("blah").

Data processing

Our aim is to find the sounds associated with the birds and frogs present in a region of interest. Ideally we could just search for occurrence records from our region of interest that also have associated media files. Unfortunately, though, most occurrence records are simple observations without associated media.

Our strategy instead is to use a general occurrence search to discover the taxa that are present in our region of interest, and then a secondary search for sound files for those same taxa (regardless of location). These media will almost certainly have been recorded at locations other than our region of interest, but we assume that a given species makes the same sounds worldwide. This assumption will not always hold.

Since there aren't very many occurrences of birds and frogs with sound media, the easiest approach is just to grab the lot, cache them locally, and filter them later according to other criteria. This also means that we only need to hit the GBIF servers once for this part of the processing.
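The fetch-once-and-cache idea can be sketched as a small helper that only runs the (slow) fetching function when no local copy exists yet. This is a minimal sketch assuming an `.rds` file as the cache format; `cached_fetch()` and `fake_fetch()` are hypothetical names, and in the real workflow the fetch function would wrap the rgbif queries for bird and frog sound records rather than returning a toy data frame.

```r
# Hypothetical helper: return the cached object if it exists, otherwise
# run fetch() once, save its result to `path`, and return it.
cached_fetch <- function(path, fetch) {
  if (file.exists(path)) {
    return(readRDS(path))
  }
  result <- fetch()
  saveRDS(result, path)
  result
}

# Stand-in for the GBIF download; it counts how often it is actually called.
n_calls <- 0
fake_fetch <- function() {
  n_calls <<- n_calls + 1
  data.frame(taxonKey = c(1, 2), media = c("a.mp3", "b.mp3"),
             stringsAsFactors = FALSE)
}

cache_file <- tempfile(fileext = ".rds")
first <- cached_fetch(cache_file, fake_fetch)   # runs fake_fetch() and writes the cache
second <- cached_fetch(cache_file, fake_fetch)  # reads the .rds file instead
```

Subsequent filtering steps can then work from the cached object without touching the GBIF servers again.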

We choose a region of interest and find out which bird and frog taxa occur there, then intersect that list of taxa with the list for which we have sound media. One media item per taxon is used, and we also retrieve an image for each taxon to use in the web interface.
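A minimal sketch of the intersection and the one-item-per-taxon rule, assuming both the regional occurrences and the cached sound records are data frames with a GBIF `taxonKey` column. The `url` column, the example keys, and the choice of simply taking the first sound found for each taxon are illustrative assumptions, not necessarily the selection rule behind the live soundscape.

```r
# Hypothetical example data: occurrences recorded in the region of interest,
# and the locally cached sound-media records (taxonKey + media URL).
region_occ <- data.frame(
  taxonKey = c(101, 101, 102, 103, 104)
)
sound_media <- data.frame(
  taxonKey = c(101, 101, 103, 105),
  url = c("s101a.mp3", "s101b.mp3", "s103.mp3", "s105.mp3"),
  stringsAsFactors = FALSE
)

# Taxa observed in the region
present <- unique(region_occ$taxonKey)

# Keep only sound records for those taxa (intersection of the two lists)
regional_sounds <- sound_media[sound_media$taxonKey %in% present, ]

# One media item per taxon: here simply the first record found
regional_sounds <- regional_sounds[!duplicated(regional_sounds$taxonKey), ]
print(regional_sounds)
```

Taxon 102 is dropped because it has no sound media, and taxon 105 because it was not recorded in the region.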

For data-rich regions, we can potentially apply additional filters according to the attributes of the data — for example, by time of year, allowing seasonally-varying results (as with the example for Bavaria given here).
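A minimal sketch of seasonal filtering, assuming each occurrence record carries a `month` value (GBIF occurrence records have a `month` field, although it can be missing). The season definitions (meteorological seasons, Northern Hemisphere) and the records themselves are made up for illustration; records with a missing or intermediate month fall into "other".

```r
# Hypothetical occurrence records: species name and month of observation
occ <- data.frame(
  species = c("A", "A", "B", "C", "C", "D"),
  month   = c(6, 1, 7, 12, 2, 8),
  stringsAsFactors = FALSE
)

# Assumed season definitions (meteorological, Northern Hemisphere)
season_of <- function(month) {
  ifelse(month %in% 6:8, "summer",
         ifelse(month %in% c(12, 1, 2), "winter", "other"))
}
occ$season <- season_of(occ$month)

# Species lists per season
summer_taxa <- unique(occ$species[occ$season == "summer"])
winter_taxa <- unique(occ$species[occ$season == "winter"])

# Taxa found in one season but not the other
summer_only <- setdiff(summer_taxa, winter_taxa)
winter_only <- setdiff(winter_taxa, summer_taxa)
```

Each seasonal species list can then be intersected with the sound-media list in the same way as the full regional list.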

The processed data are summarised into a JSON-formatted file to be used by the web interface, including the appropriate citation details for each image and audio file.

User interface

The web interface is written in JavaScript, using HTML5 audio elements. Leaflet provides the mapping functionality on an OpenStreetMap base layer, with Bootstrap, Handlebars and Isotope handling the layout.

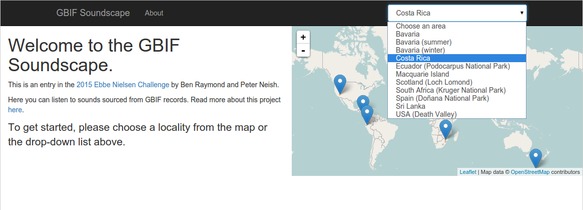

A set of pre-processed regions are available for the user to select, either from the map or from a drop-down list:

|

| Selecting a region of interest |

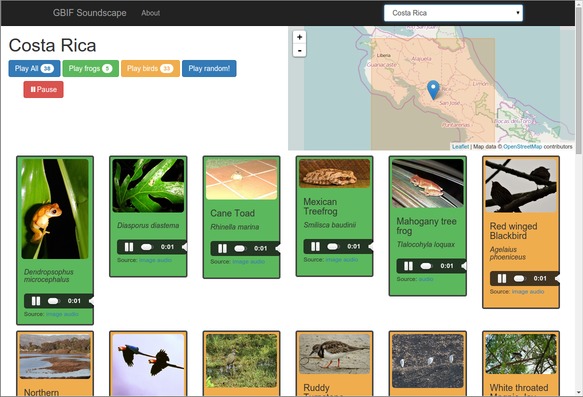

On selecting a region, the map zooms to the region extent (i.e. the spatial extent used to define the species list for the region). Each of the taxa from the region are shown with their image and an individual play/pause control. Global audio controls also allow the user to play or stop all sounds, choose birds or frogs only, or ask for a random selection to be played:

|

| Selected region with species and audio controls shown |

The number of sounds being played simultaneously is limited, so as not to turn the soundscape into an overwhelming racket. This also helps to avoid crashing the browser, which seems to be a risk when playing many audio files at once.
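The capping logic itself does not depend on the language, so here it is sketched in R; the live interface implements its controls in JavaScript. The eviction policy (stop the oldest sound when a new one would exceed the cap), the cap of 3, and the names `start_sound()` and `max_playing` are all assumptions for illustration.

```r
# Hypothetical cap on simultaneous playback. `playing` holds the ids of
# sounds currently playing, oldest first.
max_playing <- 3

start_sound <- function(playing, id, max_playing) {
  # Move id to the end (newest), removing any earlier entry for it
  playing <- c(setdiff(playing, id), id)
  # If over the cap, keep only the most recently started sounds
  if (length(playing) > max_playing) {
    playing <- tail(playing, max_playing)
  }
  playing
}

playing <- character(0)
for (id in c("owl", "wren", "frog", "lark")) {
  playing <- start_sound(playing, id, max_playing)
}
print(playing)  # "owl" was stopped to make room for "lark"

# A random selection can respect the same cap
taxa <- c("owl", "wren", "frog", "lark", "toad")
random_pick <- sample(taxa, min(length(taxa), max_playing))
```

An alternative design is to refuse new sounds once the cap is reached; evicting the oldest keeps the soundscape changing when the user clicks around.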

A copy of the interface has been included in the submission. Use Firefox if running from a local copy: Chrome doesn't allow access to local JSON files via Ajax.

The live version of the web interface can be accessed here, and the source code is available.

Limitations and biases

The results are limited both by the survey effort in the region of interest (i.e. how complete is the species list that we obtain for the region?) and by the coverage of those taxa in terms of audio media.

Filtering and subsetting the results by attributes can fairly quickly reach a point where data coverage affects the results. For example, the seasonal species lists for Bavaria include 76 taxa that were found to be present in summer but not in winter, and 21 taxa that were found to be present in winter but not in summer. Some of these differences could be explained simply by survey effort, while others will reflect genuine seasonal patterns of occupancy (e.g. the winter-only list includes the gull Larus marinus, which presumably migrates from far northern Europe to more temperate areas in winter).
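One rough way to weigh survey effort against genuine seasonal patterns is to look at how many records support each season-only taxon, alongside the total number of records per season. The sketch below uses made-up records with a precomputed `season` column; treating record counts as a proxy for effort is an assumption, and a taxon backed by a single record in one season is weak evidence of a seasonal pattern.

```r
# Hypothetical records: species and season of each occurrence record
occ <- data.frame(
  species = c("A", "A", "A", "B", "C", "C", "C", "C", "D"),
  season  = c("summer", "summer", "winter", "summer",
              "winter", "winter", "winter", "winter", "summer"),
  stringsAsFactors = FALSE
)

# Total records per season: a crude proxy for survey effort
effort <- table(occ$season)

# Records per species (rows) per season (columns)
counts <- table(occ$species, occ$season)

# Taxa recorded in one season only
summer_only <- rownames(counts)[counts[, "summer"] > 0 & counts[, "winter"] == 0]
winter_only <- rownames(counts)[counts[, "winter"] > 0 & counts[, "summer"] == 0]

# How many records support each season-only taxon
counts[summer_only, "summer"]
counts[winter_only, "winter"]
```

Here the summer-only taxa each rest on a single record, whereas the winter-only taxon has four, so the latter is the more convincing seasonal signal.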

Further work

Data processing

The sound component of video media could be used to supplement the available audio files, although we didn't do it here.

Some sound media contain human voices, which strongly detract from the overall results. We excluded such audio clips here by avoiding all media from any provider that tended to have voices present — rather a brutal approach! A more elegant solution would be to use some digital signal processing to identify periods of unwanted sounds (e.g. human voices, traffic noise). Periods of silence could also be removed, reducing long recordings down to shorter snippets of still-relevant audio.
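Silence removal is the simpler half of this idea. A minimal sketch, assuming the audio has already been decoded into a numeric vector of mono samples (for instance with a package such as tuneR): the signal is split into fixed-length frames and only frames whose root-mean-square energy exceeds a threshold are kept. The frame length and threshold are illustrative assumptions, and detecting voices or traffic noise would need considerably more than an energy threshold.

```r
# Minimal energy-based silence removal. `x` is a mono signal as a numeric
# vector of samples; frame_len and threshold are illustrative assumptions.
trim_silence <- function(x, frame_len = 1024, threshold = 0.01) {
  n_frames <- floor(length(x) / frame_len)
  if (n_frames == 0) return(x[0])
  # One column per non-overlapping frame (any trailing partial frame is dropped)
  frames <- matrix(x[seq_len(n_frames * frame_len)], nrow = frame_len)
  # Root-mean-square energy of each frame
  rms <- sqrt(colMeans(frames^2))
  # Keep frames above the threshold, in their original order
  as.vector(frames[, rms > threshold, drop = FALSE])
}

# Synthetic signal at 8 kHz: 1 s silence, 1 s of a 440 Hz tone, 1 s silence
sr <- 8000
quiet <- rep(0, sr)
tone <- 0.5 * sin(2 * pi * 440 * (0:(sr - 1)) / sr)
x <- c(quiet, tone, quiet)

y <- trim_silence(x)
length(y) / sr  # duration in seconds of the retained audio
```

Because frames are kept whole, a little silence at the edges of the tone survives; smaller frames trade that precision against more noise in the energy estimate.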

User interface

The demonstration interface provides sounds and images for a number of pre-computed regions. A more flexible interface that allows the user to select an arbitrary region of interest would be possible, but would likely require a faster means of retrieving a list of species given a spatial and/or other query (e.g. something like the ALA's occurrence facet search, which we could not see an obvious way to replicate with the current GBIF API).

Notes on our experiences with sound media from GBIF

It's worth noting that, at the time of writing, other sound media were available through GBIF, but were not returned when the mediatype was specified. For example, we can specifically query the Macaulay Library sound archive in GBIF by specifying its datasetKey. Asking for Macaulay records with sound media:

occ_search(taxonKey=212, mediatype='Sound', limit=10, datasetKey="7f6dd0f7-9ed4-49c0-bb71-b2a9c7fed9f1")

returned no results. But without specifying the mediatype:

occ_search(taxonKey=212, limit=10, datasetKey="7f6dd0f7-9ed4-49c0-bb71-b2a9c7fed9f1")

did return records. Some extra processing would then be needed to download each associated web page (given in the references attribute of each data record) and extract the audio file link from it (we didn't do this here).
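The extraction step could look roughly like the sketch below, which pulls candidate audio links out of a page's HTML with base R regular expressions. The page structure, the list of audio extensions, and the `example.org` URLs are placeholders, since we did not inspect how the actual record pages expose their audio; in practice the HTML could be read with `paste(readLines(url, warn = FALSE), collapse = "\n")`, and an HTML parser such as xml2 or rvest would be more robust than regular expressions.

```r
# Hypothetical helper: find href/src values that end in a common audio
# file extension. Real record pages may structure their links differently.
extract_audio_links <- function(html) {
  pattern <- "(href|src)=\"[^\"]+\\.(mp3|wav|ogg|m4a)\""
  hits <- regmatches(html, gregexpr(pattern, html, ignore.case = TRUE))[[1]]
  # Strip the attribute name and quotes, keeping only the URL itself
  unique(sub("^(href|src)=\"([^\"]+)\"$", "\\2", hits, ignore.case = TRUE))
}

# Placeholder page: one audio file linked twice, plus an image
page <- '<html><body>
  <a href="https://example.org/audio/12345.mp3">Download</a>
  <audio src="https://example.org/audio/12345.mp3"></audio>
  <img src="https://example.org/img/12345.jpg">
</body></html>'

extract_audio_links(page)
```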