Timeouts in beach volleyball

2018-10-03

Ben Raymond, Alexis Lebedew, Chau Le

(See also Alexis’ summary of this study.)

Updated April 2019 to correct the statement about the interpretation of confidence intervals.

Introduction

Timeouts in volleyball are commonly called when the serving team is on a run of points, with the conventional wisdom suggesting that calling a timeout will help to break that run. However, previous analyses of high-level men’s indoor volleyball have found that timeouts are not associated with an increase in sideout percentage, casting some doubt on that conventional wisdom.

Here, we examine a large beach volleyball dataset to see if similar results hold in the beach format of the game.

Acknowledgement: Many thanks to Volleyball Australia for their generous collaboration — this analysis would not have been possible without their support, and in particular without access to the data.

Data summary

The data set consists of beach volleyball matches from World Tour events, Commonwealth Games, Asian Volleyball Confederation, Oceania championships, National Tour, and other high level competitions from 2011–2018. A quick data summary:

| Number of matches | Number of men’s matches | Number of women’s matches | Number of sets | Number of timeouts | Number of technical timeouts | Number of injury timeouts |
|---|---|---|---|---|---|---|
| 1298 | 792 | 506 | 2728 | 3742 | 2208 | 41 |

Timeout calling patterns

We are interested in what happens around timeouts, so let’s first look at when timeouts occur. Technical timeouts should happen when the sum of the two teams’ scores is 21. Let’s check that:

| Score sum | N |
|---|---|
| 20 | 1 |
| 21 | 2205 |
| 22 | 1 |
| 28 | 1 |

This is indeed the case. There are a small number of errors in the database (either injury/regular timeouts incorrectly marked as TTOs, or incorrect scores). We’ll exclude these matches from the analysis.
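A check of this kind can be sketched in a few lines of Python. This is only an illustration: the `tto_scores` records and both function names are hypothetical, and the real database layout is not described in this post.

```python
from collections import Counter

# Hypothetical records: the two teams' scores at each technical timeout.
tto_scores = [(11, 10), (12, 9), (10, 10), (14, 7), (15, 13)]

def score_sum_counts(scores):
    """Count how many technical timeouts occurred at each combined score."""
    return Counter(a + b for a, b in scores)

def unexpected_sums(counts, expected=21):
    """Return the combined scores (with counts) that differ from the expected sum."""
    return {total: n for total, n in counts.items() if total != expected}

counts = score_sum_counts(tto_scores)
flagged = unexpected_sums(counts)

print(sorted(counts.items()))  # tally of score sums
print(flagged)                 # candidates for data-entry errors
```

Any entries in `flagged` would point to matches that need checking or excluding.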

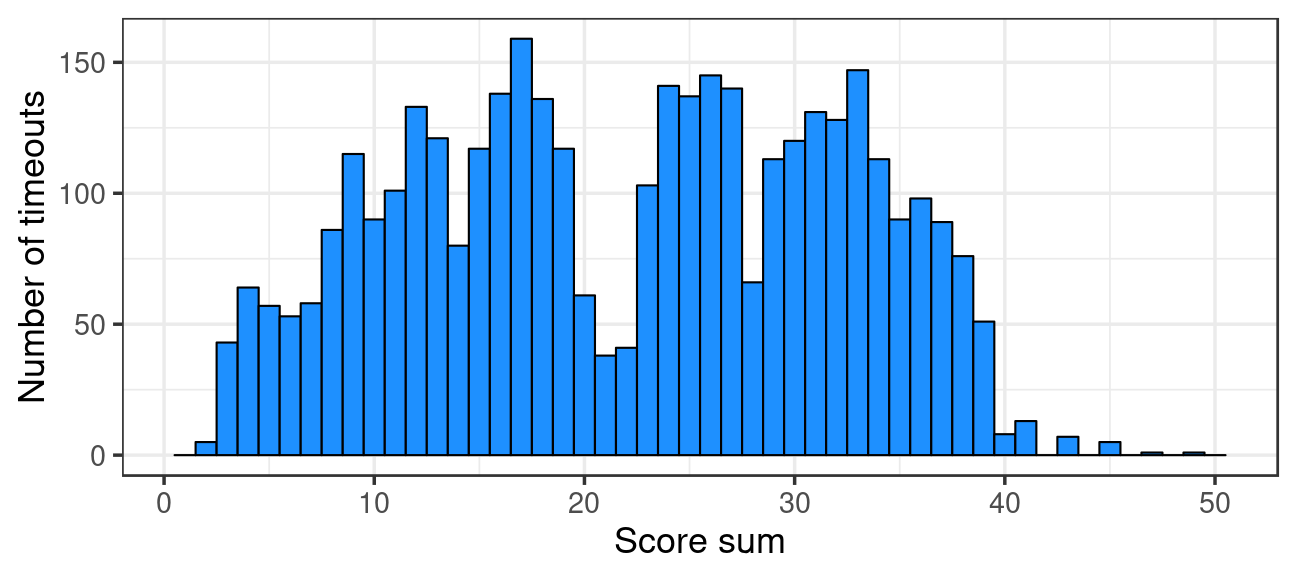

We can similarly look at regular timeouts, and the sum of the team scores when these are called:

As one would expect, there are far fewer timeouts called when the sum of the team scores is around 21, because technical timeouts occur then.

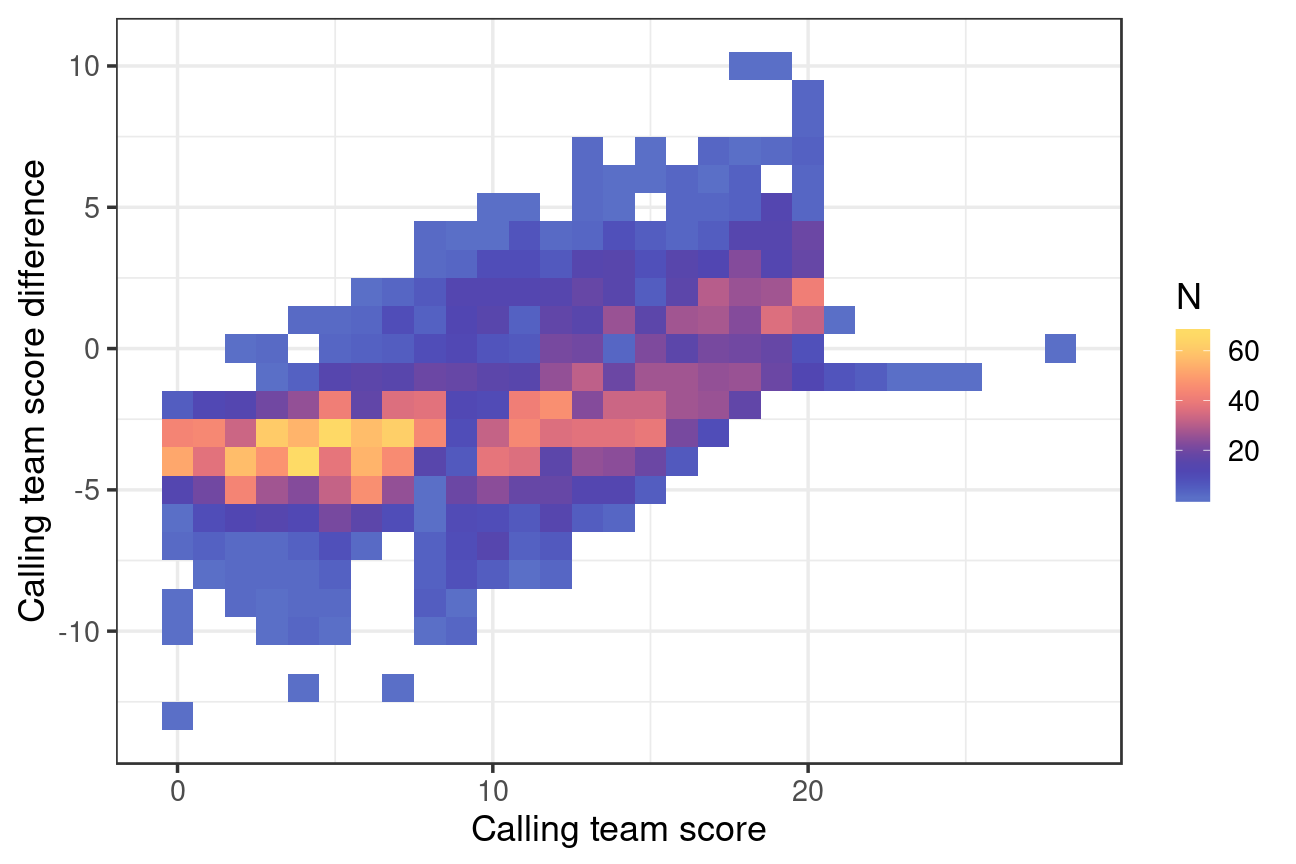

Now let’s look at a count of the number of regular timeouts, according to the calling team’s score and the score difference:

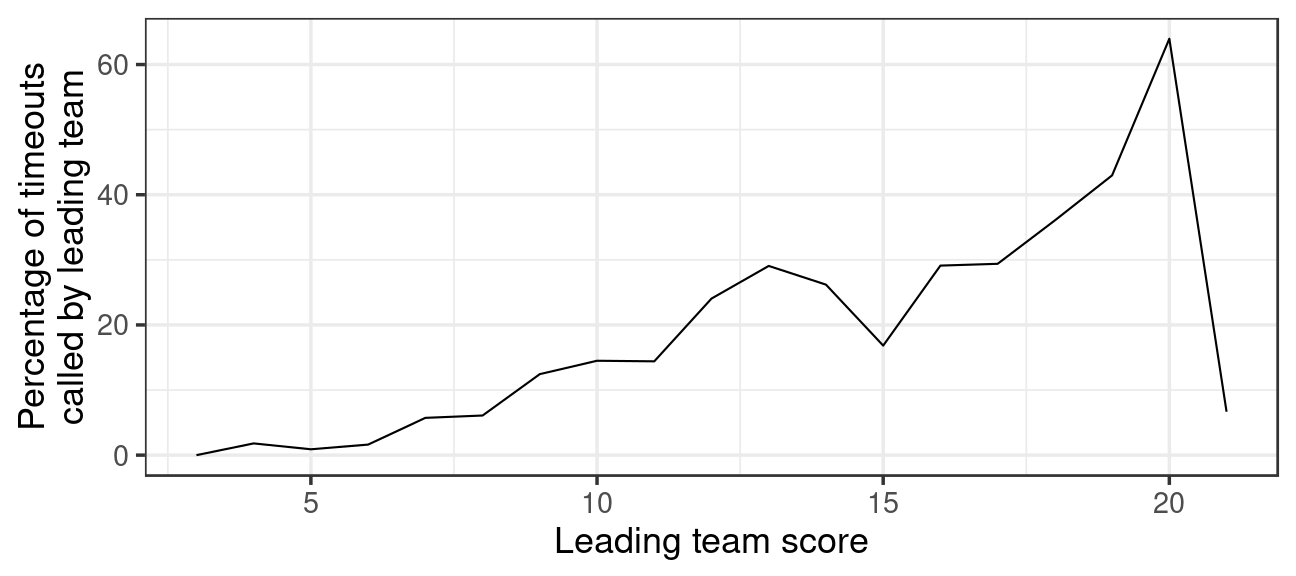

There is a hotspot of timeouts called when the team score is less than 10 and the score difference is negative (i.e. the team is trailing). Generally, timeouts are called by the trailing team, but there are also some timeouts called later in the set by the team that is leading. In fact, towards the end of the set, the majority of timeouts are called by the leading team:

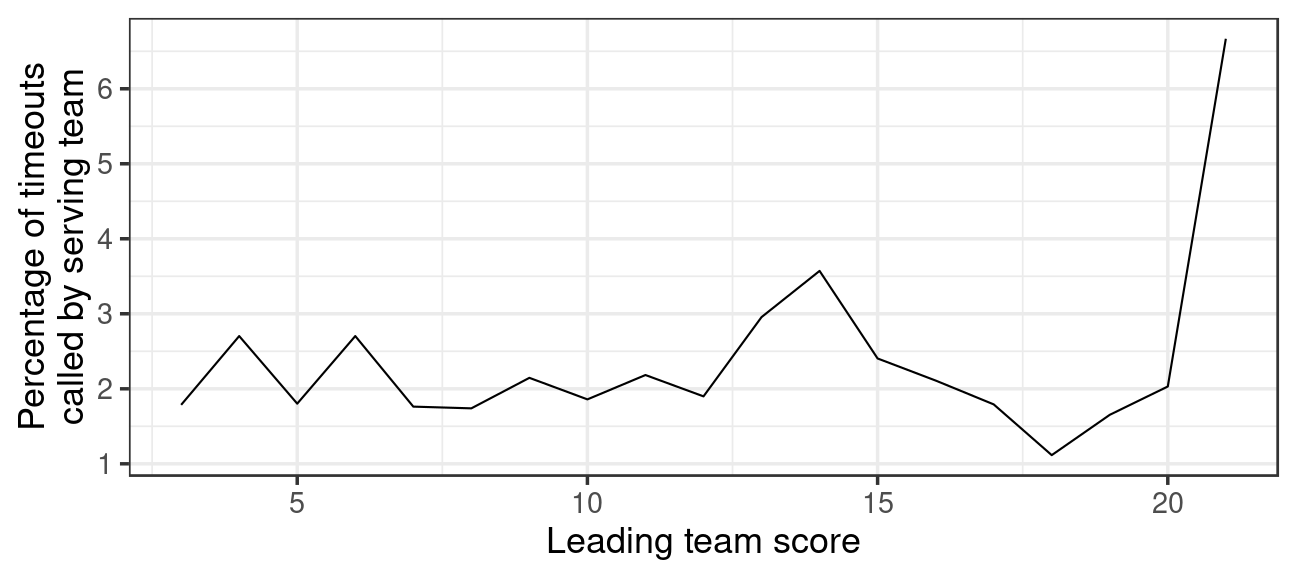

Timeouts are almost always called by the receiving team. Only 2.1% of timeouts are called by the serving team.

Overall, these patterns are comparable to the timeout calling patterns for indoor volleyball.

Sideout after timeouts

At the most basic level, we can do a simple calculation of sideout percentage after different timeout types and compare to sideout percentage after no timeout:

| Timeout type | Sideout % |
|---|---|
| Injury timeout | 70.7 |
| No timeout | 64.9 |
| Technical timeout | 65.0 |
| Timeout | 64.0 |

This suggests that, except perhaps for injury timeouts, there is little difference in overall sideout percentage following timeout, technical timeout, or no timeout. (There are very few injury timeouts, only 41 in total, so it’s difficult to say much about these.)
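For readers who want to reproduce this kind of summary on their own data, here is a minimal Python sketch. It assumes each rally is stored as a pair of (timeout type preceding the rally, whether the receiving team won it). That layout, the sample records, and the function name are illustrative assumptions.

```python
from collections import defaultdict

# Hypothetical rally records: (timeout type before the rally, receiving team won?)
rallies = [
    ("No timeout", True),
    ("No timeout", False),
    ("No timeout", True),
    ("Timeout", True),
    ("Timeout", False),
    ("Technical timeout", True),
]

def sideout_percentage(records):
    """Sideout percentage (rallies won by the receiving team) per timeout type."""
    won = defaultdict(int)
    total = defaultdict(int)
    for timeout_type, receiving_won in records:
        total[timeout_type] += 1
        won[timeout_type] += int(receiving_won)
    return {k: 100.0 * won[k] / total[k] for k in total}

for timeout_type, pct in sorted(sideout_percentage(rallies).items()):
    print(f"{timeout_type}: {pct:.1f}")
```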

Let’s break it down a little further. There are several factors that could plausibly be important for sideout/timeout success. We have already seen that timeouts are pretty much always called by the receiving team, and that early in the set timeouts tend to be called by the team that is trailing, but late in the set by the team that is leading. Hence, the score margin (the difference between the score of the calling team and the score of the other team) could be important. How far through the set we are might also matter because timeouts called late in the set might be called for different reasons to those called early in the set. And finally the serve run length preceding a timeout might matter. Timeouts are called by the receiving team, typically in order to break a run of serves by the other team. The serve run length indicates how many points in a row the serving team won before the timeout was called.
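To make the serve run length concrete, the sketch below derives it from the sequence of serving teams within a set. It relies on the fact that a team keeps serving only while it keeps winning points, so the number of consecutive earlier rallies served by the same team equals the number of points in a row that team has just won. The function name and the input format are assumptions for illustration.

```python
def prior_serve_run_lengths(serving_teams):
    """For each rally, count the consecutive preceding rallies served
    (and therefore won) by the team that is serving this rally."""
    runs = []
    run = 0
    previous = None
    for team in serving_teams:
        # Same server as the last rally means the run continues; otherwise it resets.
        run = run + 1 if team == previous else 0
        runs.append(run)
        previous = team
    return runs

# Hypothetical set: team A serves three times in a row, then B, then A twice.
print(prior_serve_run_lengths(["A", "A", "A", "B", "A", "A"]))
```

A timeout called before the third rally in this example would have a prior serve run length of 2.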

We’ll first look at these factors separately.

From here onwards, we will only consider regular timeouts (not technical or injury timeouts), and only timeouts called by the receiving team.

Timeouts by score margin and progress through set

Let’s examine sideout percentage as a function of the receiving team’s score margin (with negative values indicating that the receiving team is behind), and the leading team’s score (to indicate how far through the set we are).

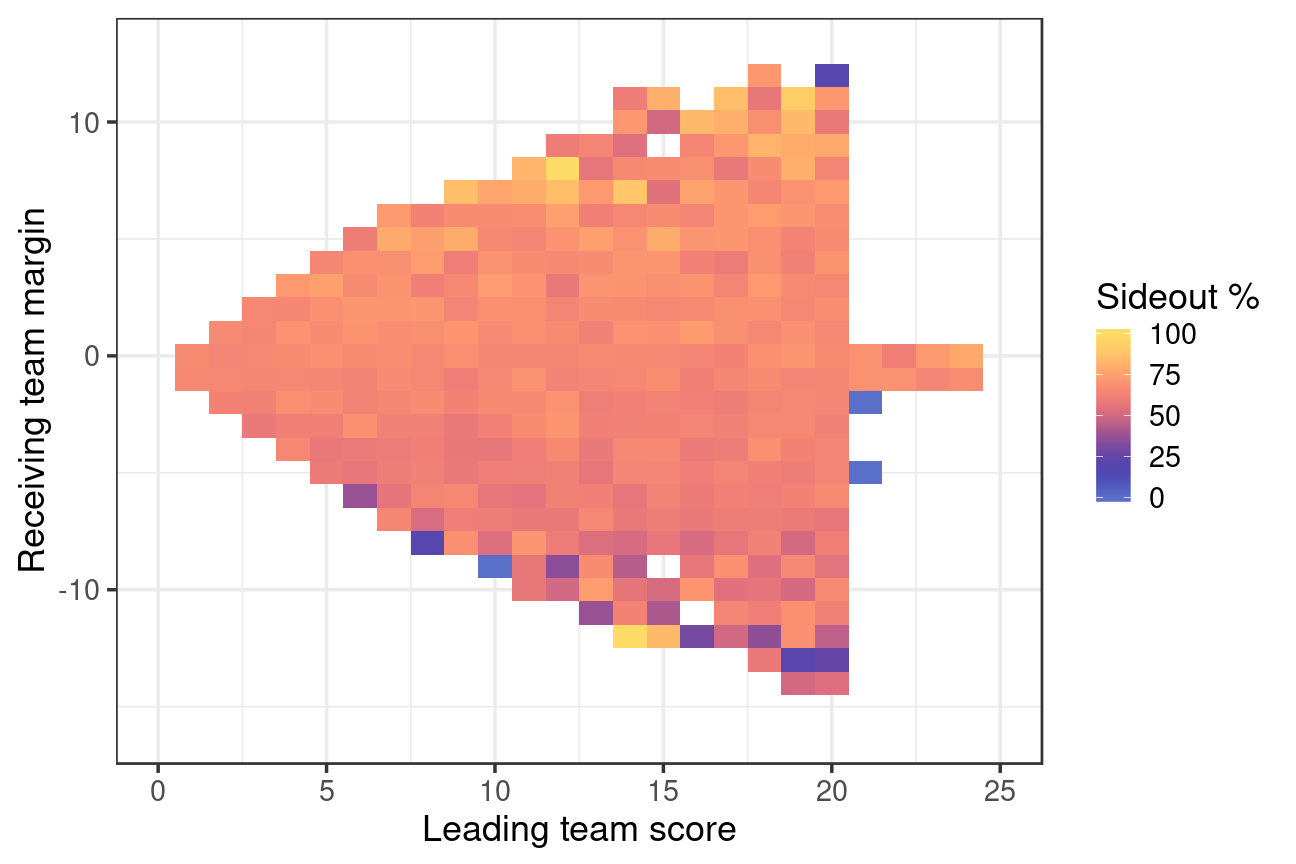

First, sideout percentage during regular points (i.e. not after timeout):

As one would expect, when the receiving team is leading the sideout percentage is generally higher than when the receiving team is trailing.

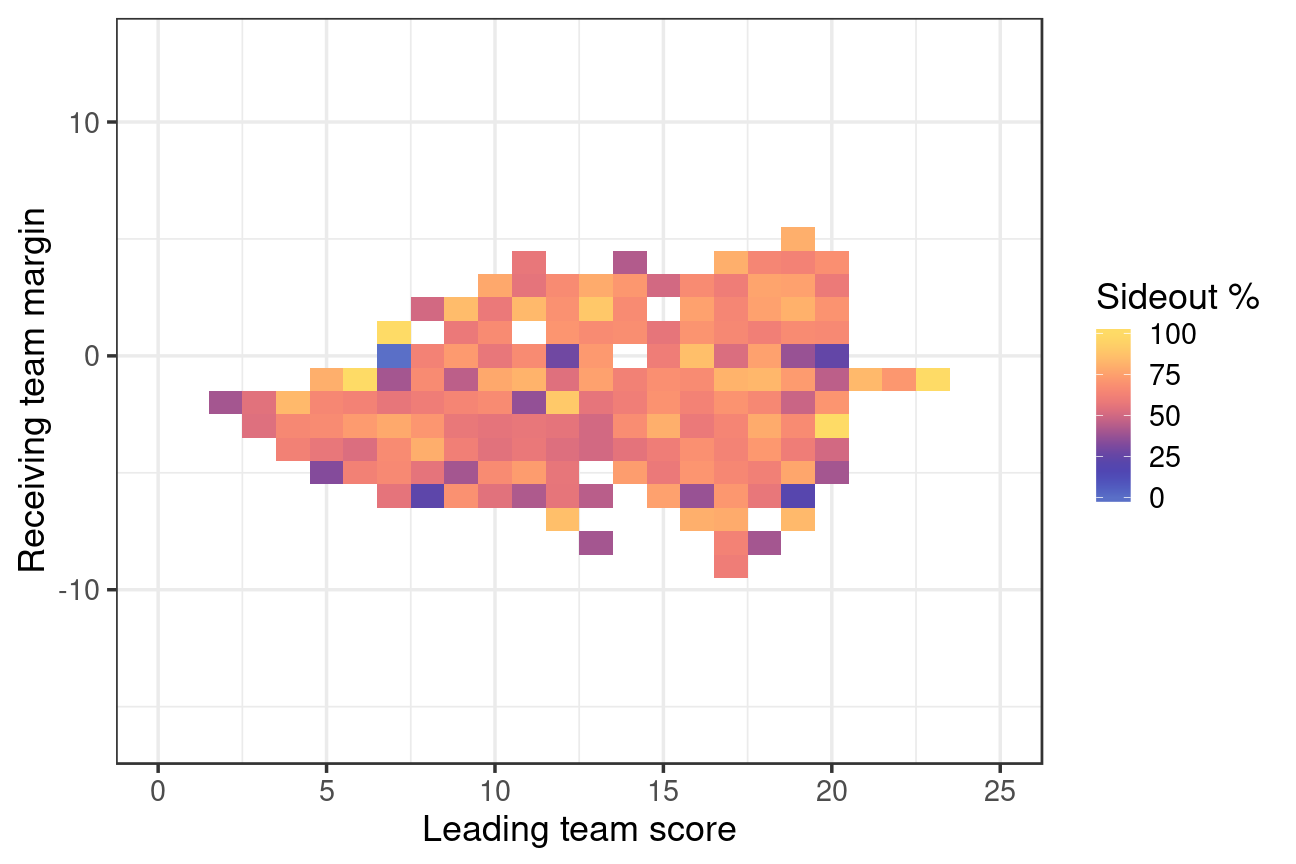

And the same plot but for points following timeout:

The region of data covered by this plot is smaller than the region of the previous plot, because this plot uses only data from after-timeout points. Those points don’t cover the same range of score combinations that non-timeout points do. The pattern in this plot is not quite as clear as the previous plot, at least partly because there are fewer data after timeout than non-timeout.

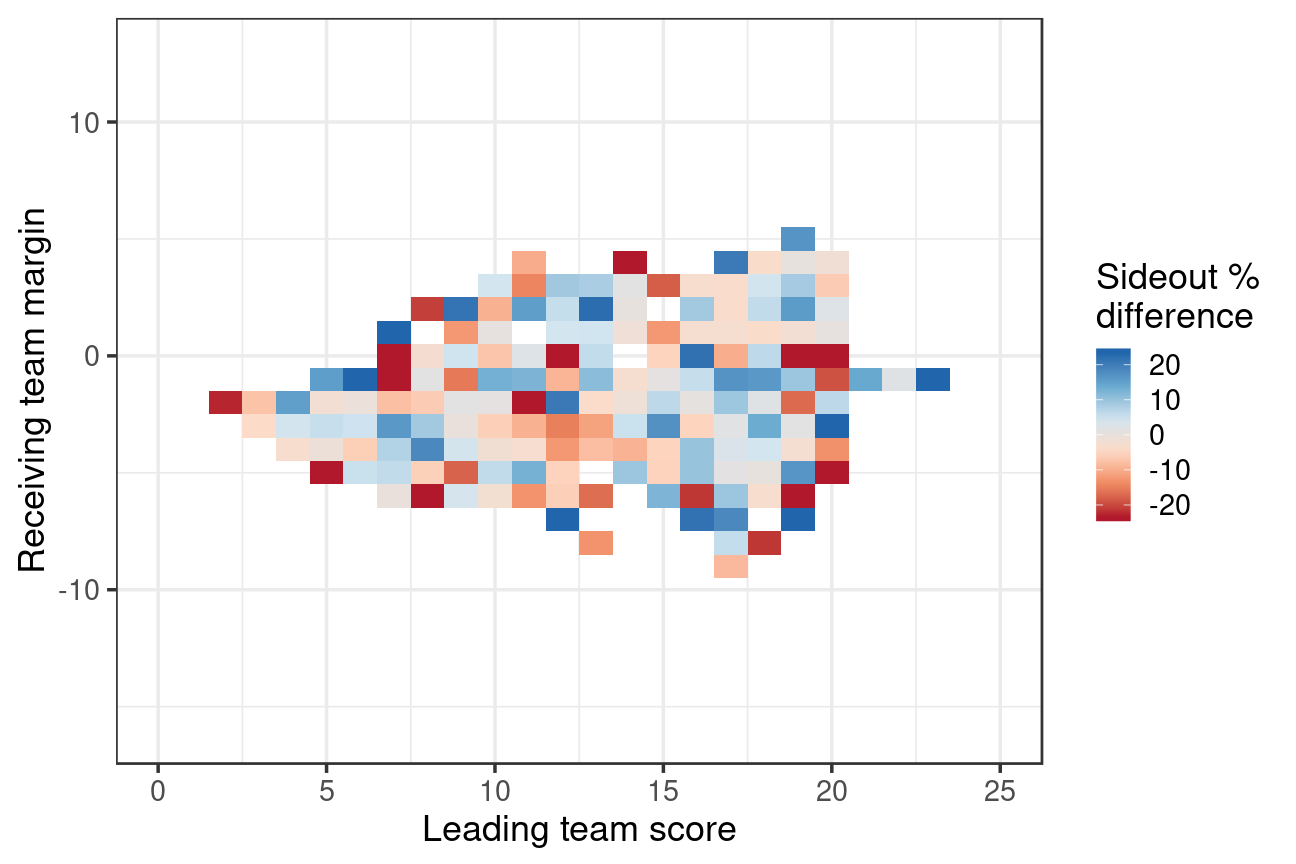

We can compare the sideout percentage after non-timeout to the sideout percentage after timeout, by subtracting the second plot’s data from the first:

There doesn’t seem to be an overwhelmingly clear pattern here to suggest that sideout percentage after timeout is consistently different to non-timeout points. (If there was a consistent difference, we would expect to see smooth gradations in colour, rather than a patchwork of blue and red.)

Serve runs

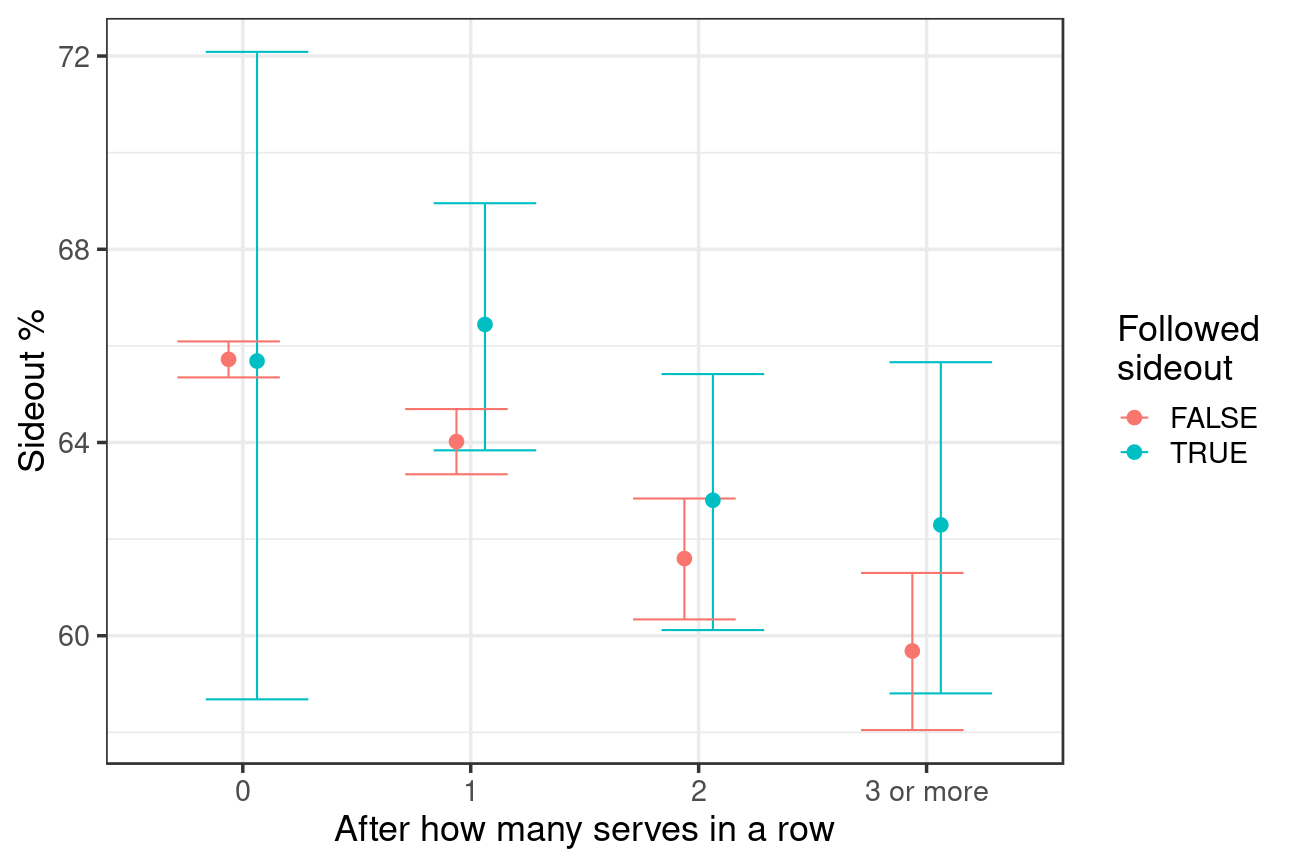

Timeouts are almost always called by the receiving team, so the question we are asking is, “Does the position in the serve run (i.e. how many points in a row the serving team won before the timeout was called) affect sideout success after timeout?” The raw data:

The dots in this graph show the sideout percentage calculated directly from the data. The vertical bars show the confidence intervals on those percentages. These confidence intervals tell us about the level of confidence that we have in the calculated percentages. (More precisely, we are assuming that our data are a sample from a larger population of volleyball matches: if we were to repeatedly sample new matches from that population and calculate intervals in the same way, those intervals would contain the true population sideout percentage 95% of the time.) The confidence intervals are narrower for the red bars because we have more data from non-timeout points, and so we can be more confident about these estimates.

The point of showing the confidence intervals is this: where there is no overlap between the red and blue bars it means that there is a significant difference between sideout percentages with/without timeout. Where there is overlap (i.e. any part of a red bar overlaps with any part of its corresponding blue bar) the situation is less clear: there can still be a significant difference with slightly-overlapping bars, but we have to do a formal statistical test to be sure. But in general, if the bars are heavily overlapping, it means that we aren’t confident that there is a difference between sideout percentages with/without timeout. The true (population) sideout percentage might lie within that overlap zone for both red and blue, and in that case there would not be a difference between them.
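As a rough illustration of how such intervals and the overlap check might be computed, here is a short Python sketch using the Wilson score interval for a proportion. The choice of the Wilson method is an assumption (the method behind the plotted intervals is not stated here), and the counts are made up.

```python
import math

def wilson_interval(successes, trials, z=1.96):
    """Approximate 95% Wilson score interval for a proportion (z = 1.96)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    centre = (p_hat + z**2 / (2 * trials)) / denom
    half_width = z * math.sqrt(
        p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2)
    ) / denom
    return centre - half_width, centre + half_width

def intervals_overlap(a, b):
    """True if two (low, high) intervals share at least one value."""
    return a[0] <= b[1] and b[0] <= a[1]

# Hypothetical counts: many non-timeout points, far fewer after-timeout points.
no_timeout = wilson_interval(6490, 10000)
after_timeout = wilson_interval(192, 300)

print(no_timeout, after_timeout)
print(intervals_overlap(no_timeout, after_timeout))
```

The interval based on 300 points is much wider than the one based on 10000 points, which mirrors the narrower red bars described above. Overlap is only a visual guide, so a formal test is still needed for borderline cases.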

Our conclusion from this plot is that there isn’t strong evidence that timeouts are associated with a difference in sideout rate, even when accounting for the effects of serve run length. There is a possibility that sideout percentage after a serve run of one might differ following a timeout, but the effect is not clear-cut in the raw data (the bars overlap, but only slightly). Rather than delve further into the effect of serve run length alone, let’s proceed to looking at multiple factors acting together.

Putting it together

Finally, we put this all together by fitting a model of sideout success. The model is a binomial generalized additive model, and depends on four predictors:

- the receiving team’s score margin (i.e. whether the receiving team is ahead or behind, and by how far)

- the leading team’s score (how far through the set we are)

- how many serves in a row there were, before this point (the serve run)

- whether this point follows a timeout or not.

(Remember, we are only considering regular timeouts called by the receiving team.)

We do this modelling step because it allows us to simultaneously account for the effects of score margin, leading team score, serve run length, and timeout. This might reveal interactions that are not apparent from looking at those factors in isolation from each other, as we did above.

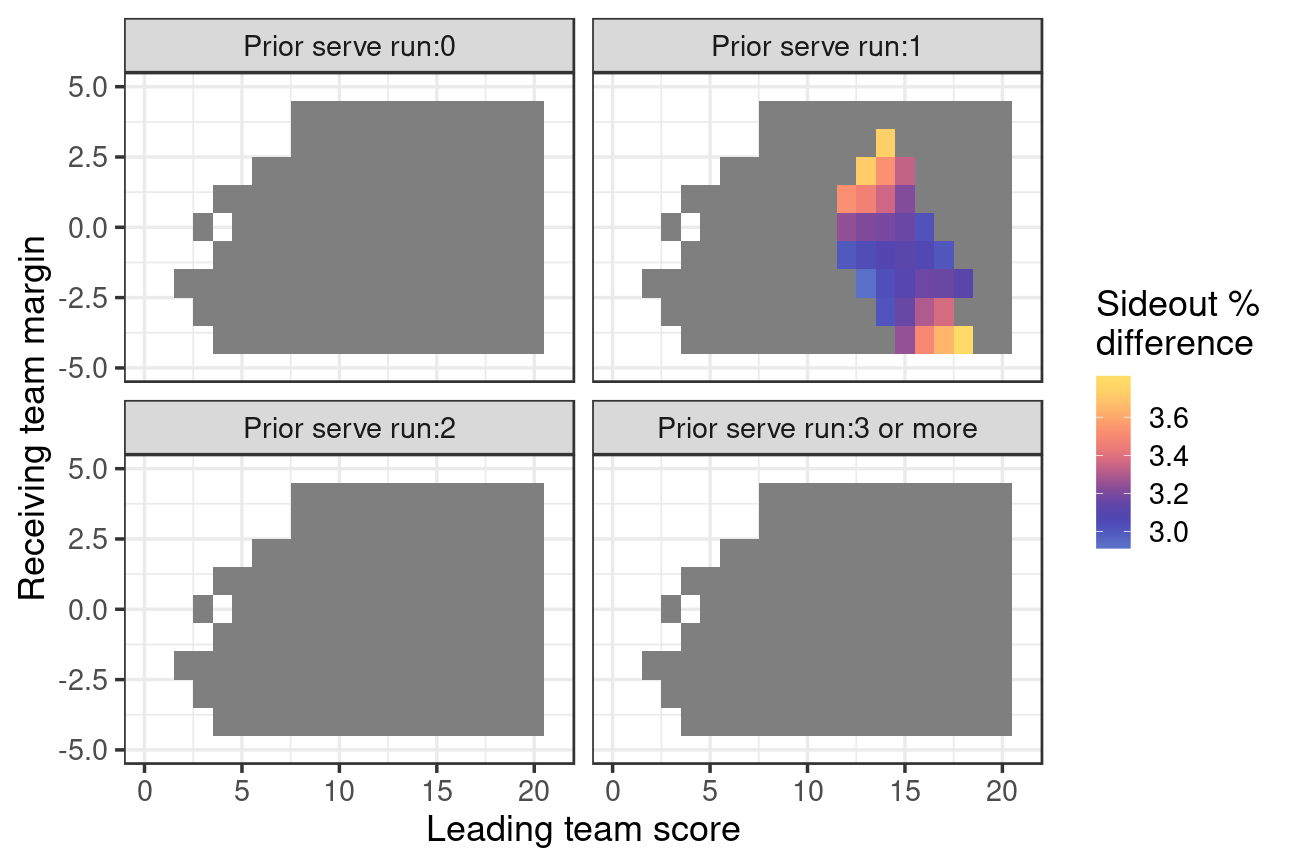

We plot the fitted model predictions below. The values (colours) show the difference in sideout percentage after timeout, compared to regular points. White areas on the plots are where we have no data. Grey areas are those where we have data, but the fitted model results are not significantly different from zero. That is, we are only showing the conditions under which the model indicates that a timeout is associated with a difference in sideout percentage.

The model indicates that timeouts are not associated with any difference in sideout rate, except under a specific set of conditions:

- after the first serve of a run (prior serve run length = 1)

- and in the mid-to-latter part of the set (leading team score = 12–18).

Under these conditions, calling a timeout was associated with an increase in sideout percentage from 64.3% to 67.5%, i.e. an increase of about 3.3 percentage points.

Let’s put that mean effect size of 3.3 percentage points into perspective: if we were to call 30 timeouts (on a serve run of 1 and the leading team score of 12–18), we would expect on average to get one extra sideout compared to calling no timeouts. Across all the sets in our data, 56% include at least one point in our “sweet spot” (on a serve run of 1 and the leading team score of 12–18). Thus, if a timeout was called in each set where this opportunity arose, it would take around 53 sets to see our one extra expected sideout compared to calling no timeouts.
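The arithmetic behind these figures can be written out as a short Python sketch. The variable names are illustrative, and the inputs are the rounded values quoted in the text, so the results are approximate.

```python
# Rounded values quoted in the text.
effect = 0.033            # increase in sideout probability per timeout
sweet_spot_share = 0.56   # share of sets with at least one sweet-spot point

# Timeouts needed, on average, to gain one extra sideout.
timeouts_per_extra_sideout = 1 / effect

# Sets needed if one such timeout is called in every set that offers the chance.
sets_per_extra_sideout = timeouts_per_extra_sideout / sweet_spot_share

print(round(timeouts_per_extra_sideout, 1))
print(round(sets_per_extra_sideout, 1))
```

With these rounded inputs the calculation gives roughly 30 timeouts and somewhere in the low-to-mid 50s of sets, in line with the approximate figures above.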

Differences by competition level

Our data set comprises matches from competitions of different levels: at the highest level we have World Tour events, whereas Asian Volleyball Confederation, National Tour, and Commonwealth Games events would generally be considered to be of a slightly lower standard.

As a final step, let’s examine those two tiers of competition separately. For each, we present only the data summary (so that the numbers of matches, etc, can be seen) and the final model-output figure.

Top level competition

For “top level” we include only matches from World Tour events.

| Number of matches | Number of men’s matches | Number of women’s matches | Number of sets | Number of timeouts | Number of technical timeouts | Number of injury timeouts |
|---|---|---|---|---|---|---|
| 918 | 504 | 414 | 1982 | 2733 | 1590 | 24 |

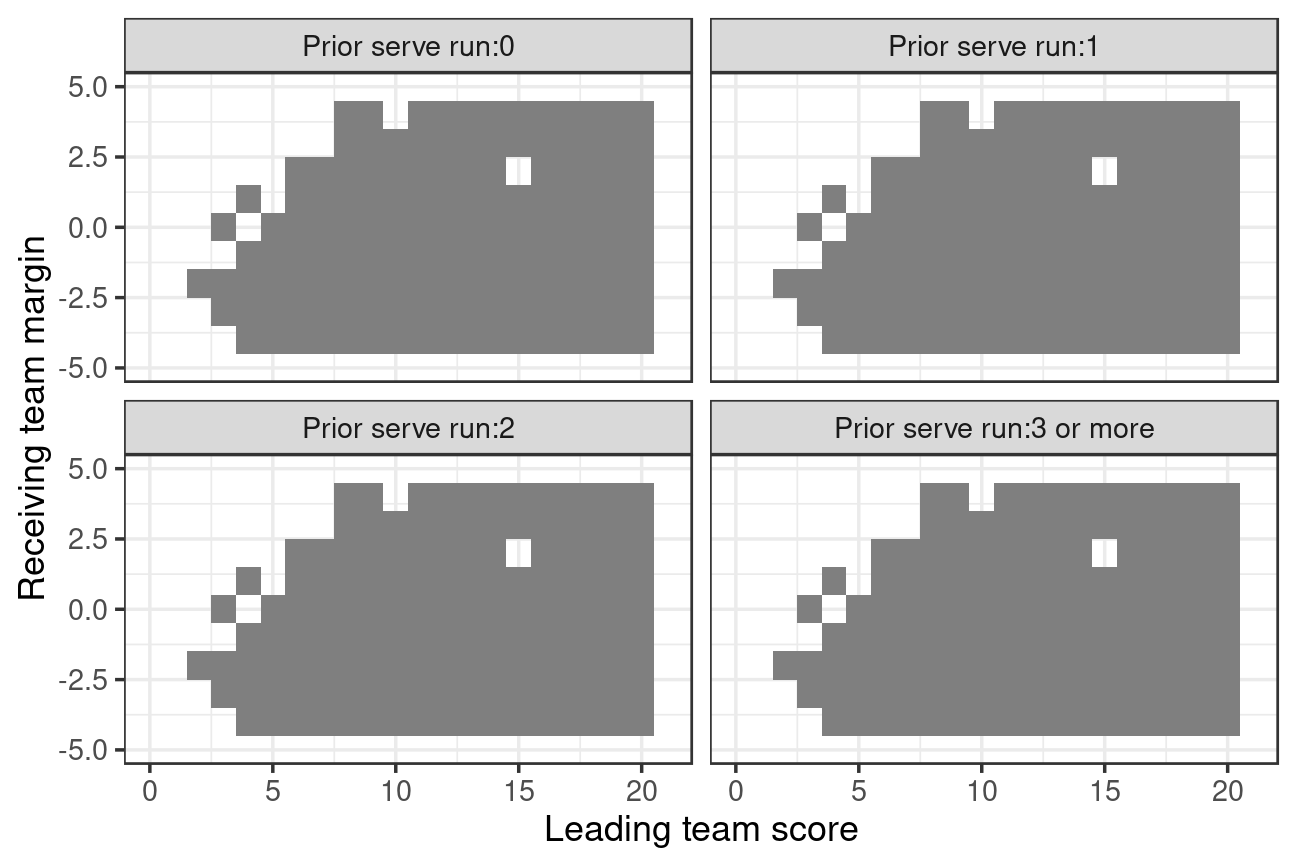

As the above figure indicates, for these top-level matches there was no association between timeout and a difference in sideout percentage.

Second-level competitions

For “second level” we include all events other than World Tour events (National Tour, Asian Volleyball Confederation, and similar events).

| Number of matches | Number of men’s matches | Number of women’s matches | Number of sets | Number of timeouts | Number of technical timeouts | Number of injury timeouts |
|---|---|---|---|---|---|---|
| 377 | 286 | 91 | 742 | 1005 | 612 | 17 |

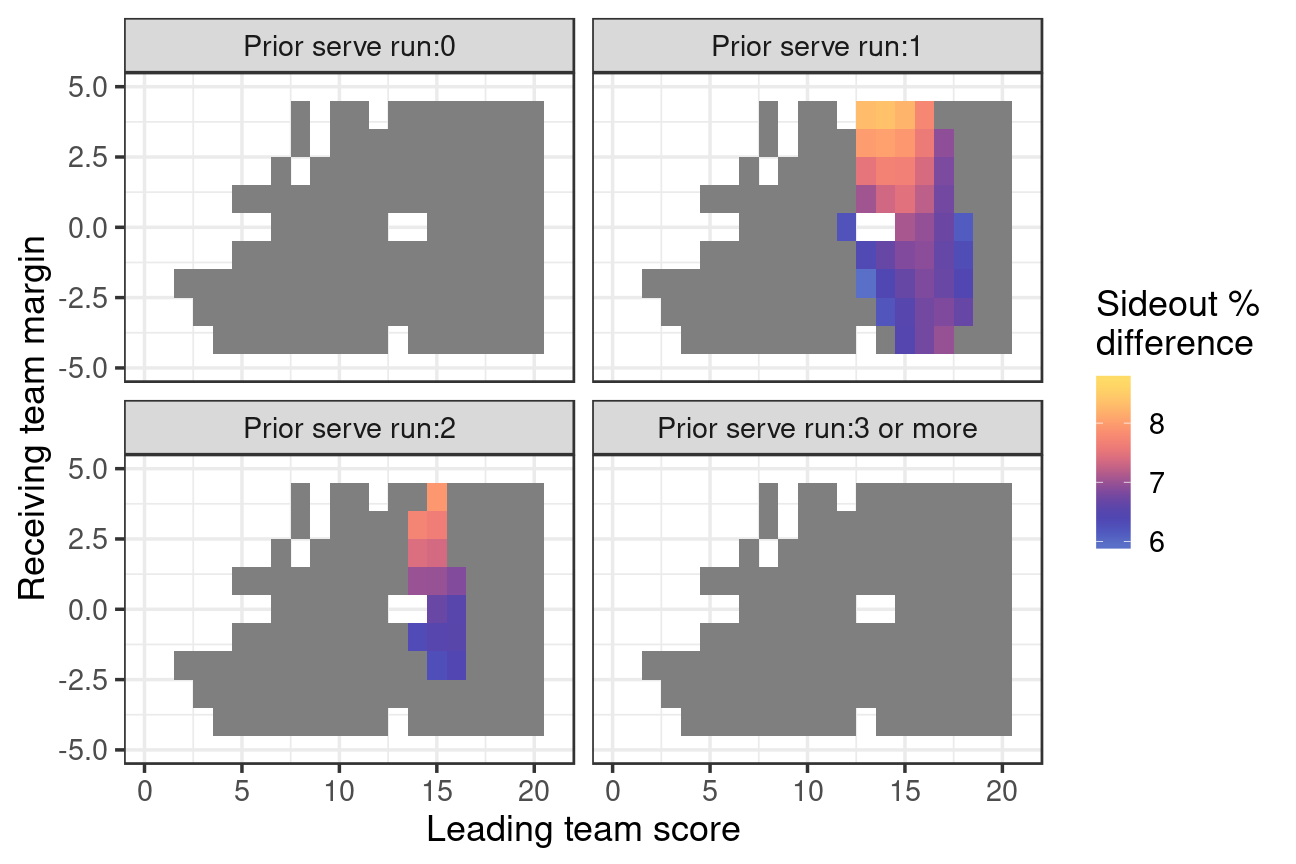

We are working with a relatively small subset of matches here (377 matches, with a bias towards men’s competitions). Nevertheless, the model results suggest that for these second-level matches, there was a slightly stronger effect of timeout than we saw with the complete data set. Timeout was again associated with an increase in sideout percentage in the mid-to-latter stages of a set, but this time on both the second and third serves of a run. The increase in sideout percentage was from 62.0% to 69.1%, i.e. an increase of about 7.0 percentage points.

So there seems to be an effect by competition level, with no evidence that sideout percentage varies after timeout at the highest level of competition, but some evidence that it does (under particular circumstances) at the less elite level.